IMDEA Networks

News

Your car’s tire sensors could be used to track you

Researchers at IMDEA Networks Institute, together with European partners, have found that tire pressure sensors in modern cars can unintentionally...

IMDEA Networks researchers recognized as Distinguished Members of IEEE INFOCOM 2026 Technical Program Committee

Researchers from the IMDEA Networks Institute have been recognized as Distinguished Members of the Technical Program Committee (TPC) of IEEE...

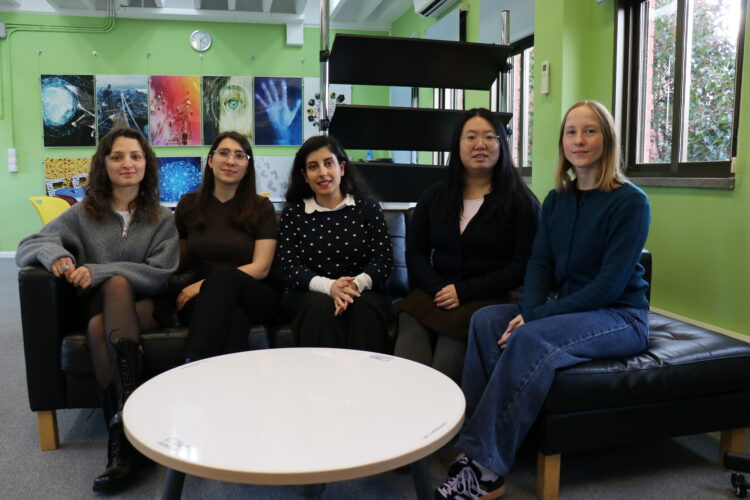

Women in STEM: Stories to inspire the next generation

As part of the International Day of Women and Girls in Science, we interviewed five of our researchers to learn...

IMDEA Networks deploys cutting-edge smart lamppost with 5G millimeter-wave and WiFi 7 technology with NEXTONIC partners

IMDEA Networks Institute has successfully deployed a state-of-the-art smart lamppost at its outdoor facilities in Leganés, Madrid, marking a significant...

MLEDGE project proves federated learning can support real-world AI services

After two and a half years of work, the MLEDGE project (Cloud and Edge Machine Learning), led by Professor Nikolaos...

IMDEA Networks strengthens its leadership in 6G research with new infrastructures funded by NextGenerationEU funds

IMDEA Networks has significantly strengthened its research capabilities in advanced 5G and future 6G networks through the execution of the...

“IMDEA Networks is a nice mix of a strong work ethic and a collaborative spirit”

Lucianna Kiffer, Research Assistant Professor at IMDEA Networks and head of the Distributed Systems and Networks Group, shares in this...

IMDEA Networks opens its laboratories to FP students in an outreach day

Around 60 vocational training students from IES San Juan de la Cruz and Colegio Valle del Miro visited three of...

IMDEA Networks creates a secure watermarking tool to protect institutional data

The European project DataBri-X, which began in October 2022, has recently concluded, achieving a key milestone: ensuring that data can...

An IMDEA Networks team participates in the 2025 Quantum Internet Alliance Hackathon

An IMDEA Networks team is taking part, within the framework of the regional project MADQUANTUM-CM (funded by Comunidad de Madrid...