IMDEA Networks

Research

Welcome to Edge Networks Group!

Our research focuses on the design of edge networked systems that support emerging applications from Cyber-Physical Systems and the Internet of Things. We investigate novel performance metrics and algorithms that cater to the requirements these applications place on sensing/collecting data, communication/offloading, and actuation/inference. Our primary goal is to achieve low delay and low energy consumption for edge devices operating over wireless links. We do this by collecting only useful data from the environment with which an application interacts, which also reduces the bandwidth requirements over both the wireless and the core networks. Topics of current interest include (but are not limited to) Hierarchical Inference, Inference Scheduling, Age of Information (AoI), and Wireless Communications.

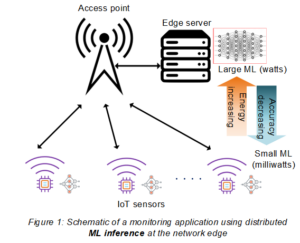

Efficient ML Inference at the Edge

In the era of Edge AI, i.e., the confluence of edge computing and artificial intelligence, an increasing number of applications at the network edge use Machine Learning (ML) inference, in particular Deep Neural Network (DNN) inference. State-of-the-art deep learning (DL) models that achieve close to 100% inference accuracy are large, requiring gigabytes of memory just to load them. At the other end of the spectrum, the tinyML community is pushing the limits of compressing DL models to embed them on memory-limited IoT devices. Performing inference locally on the end devices reduces delay, saves network bandwidth, and improves the energy efficiency of the system, but it yields a low Quality of Experience (QoE) because small DL models have low inference accuracy. To reap the benefits of local inference without compromising inference accuracy, we explore inference offloading techniques and the recently proposed Hierarchical Inference (HI) framework, in which a local inference is accepted only when it is deemed correct; otherwise, the data sample is offloaded. Using these techniques, we study the three-way trade-off between inference accuracy, delay, and total system energy consumption in Edge AI.
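To make the accuracy/delay/energy trade-off concrete, the following is a minimal sketch of one common way an HI decision can be made: accept the local inference when the small model's confidence reaches a threshold, and offload otherwise. The confidence-threshold rule, the `Sample` record, the function name, and the per-sample energy costs `e_local` and `e_offload` are all illustrative assumptions, not the group's published algorithms; the offload fraction serves here as a proxy for added delay.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    # Hypothetical per-sample record; field names are illustrative only.
    local_confidence: float  # confidence of the small on-device model (e.g., top softmax probability)
    local_correct: bool      # whether the on-device prediction matches the label
    remote_correct: bool     # whether the large remote model's prediction matches the label


def hierarchical_inference(samples: List[Sample], threshold: float,
                           e_local: float, e_offload: float) -> Tuple[float, float, float]:
    """Return (accuracy, offload fraction, total energy) for a threshold-based HI policy."""
    correct = 0
    offloaded = 0
    energy = 0.0
    for s in samples:
        energy += e_local  # the local model always runs first
        if s.local_confidence >= threshold:
            correct += s.local_correct  # accept the local inference
        else:
            offloaded += 1
            energy += e_offload  # pay for transmitting the sample
            correct += s.remote_correct  # use the remote inference instead
    n = len(samples)
    return correct / n, offloaded / n, energy


if __name__ == "__main__":
    data = [Sample(0.9, True, True), Sample(0.6, False, True),
            Sample(0.95, True, True), Sample(0.4, False, False)]
    # Sweep the threshold: 0.0 never offloads, above 1.0 always offloads.
    for th in (0.0, 0.8, 1.01):
        print(th, hierarchical_inference(data, th, e_local=1.0, e_offload=5.0))
```

In this toy data, a threshold of 0.8 matches the always-offload accuracy while offloading only half of the samples, which is the kind of operating point HI aims for.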

- Vishnu Narayanan Moothedath, Jaya Prakash Champati, and James Gross, “Getting the Best Out of Both Worlds: Algorithms for Hierarchical Inference at the Edge,” IEEE Transactions on Machine Learning in Communications and Networking (TMLCN), 2024.

- Adarsh Prasad Behera, Roberto Morabito, Joerg Widmer, and Jaya Prakash Champati, “Improved Decision Module Selection for Hierarchical Inference in Resource-Constrained Edge Devices,” MobiCom (short paper), 2023.

- Ghina Al-Atat, Andrea Fresa, Adarsh Prasad Behera, Vishnu Narayanan Moothedath, James Gross, and Jaya Prakash Champati, “The Case for Hierarchical Deep Learning Inference at the Network Edge,” NetAI Workshop, MobiSys, 2023.

- Andrea Fresa and Jaya Prakash Champati, “Offloading Algorithms for Maximizing Inference Accuracy on Edge Device Under a Time Constraint,” IEEE TPDS, 2023.

AoI Analysis and Optimization

AoI is a freshness metric that measures the time elapsed since the generation of the freshest packet available at the receiver. In contrast to system delay, AoI increases linearly between packet receptions and thereby also accounts for how frequently the information source is sampled. We analyze AoI for fundamental queueing systems and study optimal sampling and transmission strategies for minimizing AoI in these systems.
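The sawtooth behaviour described above can be computed directly from packet timestamps. The sketch below is a minimal illustration, assuming a list of (generation time, reception time) pairs and, for simplicity, a packet generated at time 0 that is already at the receiver at time 0. It returns the time-average AoI over a horizon and the peak AoI observed just before each reception. The function name and the initial condition are assumptions made for illustration.

```python
from typing import List, Tuple


def aoi_metrics(updates: List[Tuple[float, float]], horizon: float) -> Tuple[float, List[float]]:
    """Time-average AoI over [0, horizon] and the peak AoI just before each reception.

    updates: (generation_time, reception_time) pairs.
    Assumption: a packet generated at time 0 is at the receiver at time 0.
    """
    freshest_gen = 0.0  # generation time of the freshest packet at the receiver
    last_t = 0.0        # time of the previous reception
    area = 0.0          # integral of the age curve
    peaks = []
    for gen, recv in sorted(updates, key=lambda u: u[1]):
        if recv > horizon:
            break
        # Between receptions the age t - freshest_gen grows with slope 1,
        # so the area under it over [last_t, recv] is a trapezoid.
        area += ((recv - freshest_gen) ** 2 - (last_t - freshest_gen) ** 2) / 2
        peaks.append(recv - freshest_gen)  # age just before this reception
        last_t = recv
        # An out-of-order (older) packet does not make the information fresher.
        freshest_gen = max(freshest_gen, gen)
    area += ((horizon - freshest_gen) ** 2 - (last_t - freshest_gen) ** 2) / 2
    return area / horizon, peaks


if __name__ == "__main__":
    # Periodic sampling every 1.0 time unit with a constant delay of 0.5.
    n = 1000
    periodic = [(k * 1.0, k * 1.0 + 0.5) for k in range(1, n + 1)]
    avg, peaks = aoi_metrics(periodic, horizon=n + 0.5)
    print(avg, max(peaks))
```

For periodic sampling with period P and a constant delay d < P, the average age approaches d + P/2 and every peak equals d + P. This shows how AoI, unlike delay alone, depends on the sampling frequency.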

- Jaya Prakash Champati, Hussein Al-Zubaidy, and James Gross, “Statistical Guarantee Optimization for AoI in Single-Hop and Two-Hop FCFS Systems with Periodic Arrivals,” IEEE Transactions on Communications, vol. 69, no. 1, pp. 365–381, 2021.

- Jaya Prakash Champati, Ramana R. Avula, Tobias J. Oechtering, and James Gross, “Minimum Achievable Peak Age of Information Under Service Preemptions and Request Delay,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 5, pp. 1365–1379, 2021.

- Jaya Prakash Champati, Hussein Al-Zubaidy, and James Gross, “On the Distribution of AoI for the GI/GI/1/1 and GI/GI/1/2* Systems: Exact Expressions and Bounds,” in Proc. IEEE INFOCOM, May 2019.

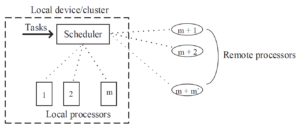

Edge Computing Offloading Algorithms

Edge computing, or fog computing, places computational resources close to (e.g., one hop away from) the entities that offload computational tasks or data for processing, and is a key architectural component of 5G and future wireless networks. Offloading computational tasks from mobile devices to edge servers instead of the cloud saves Internet bandwidth and avoids the long delays involved in communicating the data of the offloaded tasks to a cloud data centre residing somewhere on the Internet. Above all, edge computing compensates for the limited compute and memory resources of edge devices.
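A simple way to see why a nearby edge server can beat both local execution and a distant cloud is to compare completion times under a basic delay model. The sketch below assumes a task is described by its CPU cycles and input size in bits, ignores the time to return results, and models the extra path to a cloud data centre as a fixed added latency. All function and parameter names are hypothetical; this is not one of the semi-online or restart algorithms from the publications listed for this topic.

```python
def local_delay(cycles: float, f_local: float) -> float:
    # Time to execute the task on the device's own processor (cycles / Hz).
    return cycles / f_local


def offload_delay(data_bits: float, rate_bps: float, cycles: float,
                  f_remote: float, extra_latency: float = 0.0) -> float:
    # Upload time + remote execution time + any additional network latency,
    # e.g. the longer path to a distant cloud data centre (result download ignored).
    return data_bits / rate_bps + cycles / f_remote + extra_latency


def should_offload(cycles: float, f_local: float, data_bits: float, rate_bps: float,
                   f_remote: float, extra_latency: float = 0.0) -> bool:
    # Offload only if doing so finishes the task sooner than local execution.
    return (offload_delay(data_bits, rate_bps, cycles, f_remote, extra_latency)
            < local_delay(cycles, f_local))


if __name__ == "__main__":
    task = dict(cycles=1e9, f_local=1e9, data_bits=1e6, rate_bps=1e7, f_remote=1e10)
    print("edge (one hop):", should_offload(**task))
    print("cloud (+1 s path):", should_offload(**task, extra_latency=1.0))
```

With these illustrative numbers, offloading to the one-hop edge server takes about 0.2 s versus 1 s locally. The same server placed behind an extra second of network latency is no longer worth using.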

- Jaya Prakash Champati and Ben Liang, “Single Restart with Time Stamps for Parallel Task Processing with Known and Unknown Processors,” IEEE Transactions on Parallel and Distributed Systems, vol. 31, no. 1, pp. 187–200, 2020.

- Jaya Prakash Champati and Ben Liang, “Semi-Online Algorithms for Computational Task Offloading with Communication Delay,” IEEE Transactions on Parallel and Distributed Systems, vol. 28, no. 4, pp. 1189–1201, 2017.

Transient Delay Analysis and Optimization


Most research on general network performance analysis using queueing theory considers systems in steady state. For example, for the simple M/M/1 queue or more general Markovian queueing systems, the steady state is governed by the (conceptually simple) flow balance equations. In contrast, transient analysis of these systems leads to systems of differential equations that are generally intractable. Using Stochastic Network Calculus, we derived the end-to-end delay violation probability for a sequence of time-critical packets, given the transient network state (queue backlogs) at the moment the time-critical packets enter the network. Leveraging this analysis, we compute good resource allocation strategies for wireless protocols such as WirelessHART to support the QoS requirements of time-critical industrial applications.
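The contrast between steady-state and transient analysis can be seen on a single queue. The sketch below is only an illustration and not the Stochastic Network Calculus analysis described above. It uses an M/M/1/K queue, truncated to K customers so that the state space is finite. The steady state follows from the flow balance λπ_n = μπ_{n+1}. The transient distribution, by contrast, requires solving the Kolmogorov forward equations dp/dt = pQ, which are solved here numerically by uniformization. The function names and parameter values are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def mm1k_generator(lam: float, mu: float, K: int) -> Matrix:
    """Generator matrix Q of the M/M/1/K birth-death chain (states 0..K)."""
    Q = [[0.0] * (K + 1) for _ in range(K + 1)]
    for n in range(K + 1):
        if n < K:
            Q[n][n + 1] = lam  # arrival
        if n > 0:
            Q[n][n - 1] = mu   # departure
        Q[n][n] = -sum(Q[n][m] for m in range(K + 1) if m != n)
    return Q


def steady_state(lam: float, mu: float, K: int) -> List[float]:
    # Flow balance across each cut: lam * pi_n = mu * pi_{n+1}, so pi_n is proportional to rho**n.
    rho = lam / mu
    w = [rho ** n for n in range(K + 1)]
    total = sum(w)
    return [x / total for x in w]


def transient(p0: List[float], Q: Matrix, t: float, terms: int = 400) -> List[float]:
    """p(t) = p0 * exp(Q t), computed by uniformization.

    Note: math.exp(-rate * t) underflows for rate * t above roughly 745.
    """
    size = len(Q)
    rate = max(-Q[n][n] for n in range(size))  # uniformization rate
    # P = I + Q / rate is a stochastic matrix.
    P = [[(1.0 if i == j else 0.0) + Q[i][j] / rate for j in range(size)] for i in range(size)]
    v = list(p0)                      # holds p0 * P^k
    weight = math.exp(-rate * t)      # Poisson(rate * t) probability of k = 0
    result = [weight * x for x in v]
    for k in range(1, terms):
        v = [sum(v[i] * P[i][j] for i in range(size)) for j in range(size)]
        weight *= rate * t / k
        result = [r + weight * x for r, x in zip(result, v)]
    return result


if __name__ == "__main__":
    lam, mu, K = 0.5, 1.0, 10
    Q = mm1k_generator(lam, mu, K)
    p0 = [0.0] * (K + 1)
    p0[K] = 1.0  # start from a full backlog
    for t in (0.0, 5.0, 20.0, 100.0):
        print(t, [round(x, 4) for x in transient(p0, Q, t)[:3]])
    print("steady", [round(x, 4) for x in steady_state(lam, mu, K)[:3]])
```

Starting from a full backlog, the transient distribution differs markedly from the steady state at small t and only converges to it over time. This is why results that depend on the current queue backlogs call for transient analysis.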

- Jaya Prakash Champati, Hussein Al-Zubaidy, and James Gross, “Transient Analysis for Multi-hop Wireless Networks Under Static Routing,” IEEE/ACM Transactions on Networking, vol. 28, no. 2, pp. 722–735, 2020.